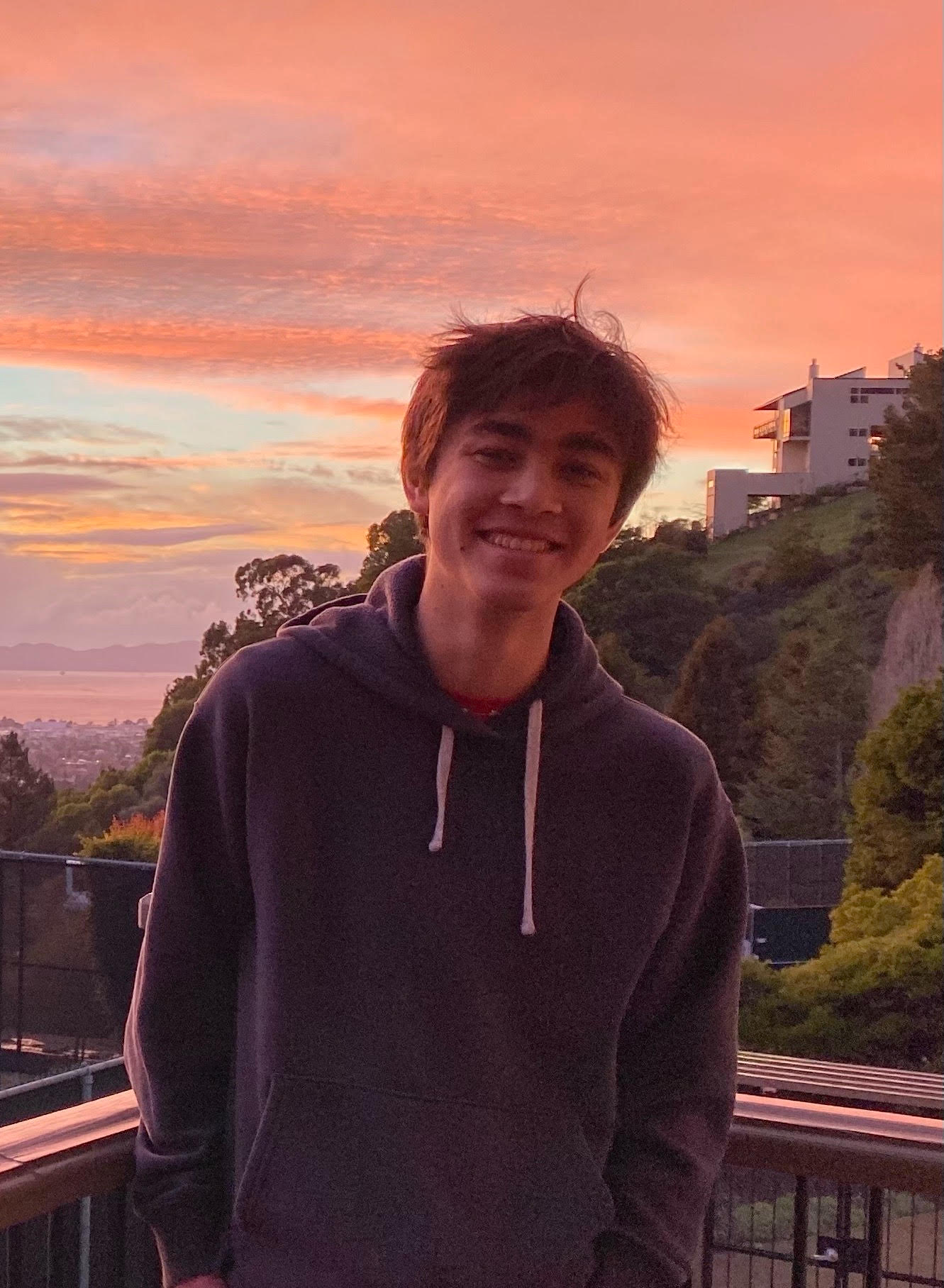

Meet Teo Cheng, a high school student who has been volunteering to lead development on the Open Book Genome Project. In 2021, Teo took Harvard’s online CS50 Intro to Computer Science to prepare for his AP Computer Science Principles exam. The following summer, he took MIT’s Introduction to Computer Science and Programming course. To put these learnings into practice and gain more hands-on experience, he searched for impactful opportunities within the non-profit Internet Archive, where his father Brenton Cheng runs the UX team. For the past year, Teo has been working closely with Mek, making improvements to the Open Book Genome Project Sequencer — a software robot that reads the Internet Archive’s publicly available books and derives public insights to enable greater access to their themes and data. Meet Teo!

Goals

This year, by joining the Open Book Genome Project team, I hoped to understand a piece of production software well enough to make meaningful contributions. Because this project may someday run on every book the Internet Archive has digitized, I also wanted experience contributing to software that must be highly accurate and performant. When I joined, I learned of several problems. For example, the ngrams derived by the book sequencer module were noisy because the module wasn't honoring the defined stop words. The page type detection also broke frequently: it was too strict and wasn't robust against OCR errors, punctuation, or variations in wording. Finally, since I already had programming experience, I was interested in learning more about the engineering development process, such as using tools like git, writing tests, and running pipelines.
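To make the stop-word problem concrete, here is a minimal Python sketch of stop-word-aware ngram counting. It is not the Sequencer's actual code: the STOP_WORDS set, the function names, and the policy of discarding any ngram that begins or ends with a stop word are all assumptions made for illustration.

```python
import re
from collections import Counter

# Hypothetical stop-word list; the real Sequencer defines its own.
STOP_WORDS = {"a", "an", "and", "in", "of", "the", "to"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return every run of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def filtered_ngram_counts(text: str, n: int) -> Counter:
    """Count ngrams, skipping any that start or end with a stop word.

    Stop words in the middle are kept, so "history of rome" survives
    while "of the" and "the roman" are dropped.
    """
    counts = Counter()
    for gram in ngrams(tokenize(text), n):
        if gram[0] in STOP_WORDS or gram[-1] in STOP_WORDS:
            continue
        counts[" ".join(gram)] += 1
    return counts
```

For example, filtered_ngram_counts("The history of the Roman Empire", 2) keeps only "roman empire", since every other bigram begins or ends with a stop word.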

What I’ve Learned

So far, while working on the Open Book Genome Project (OBGP), I've gained experience with the following tools and skills:

- Docker, which let me install the project in an isolated container without cluttering my computer's file system.

- ssh, which I used to run the OBGP pipeline on a more powerful remote computer.

- tmux, which kept our processes running on the server even if the connection between the client and server dropped, since the internet connection could be disrupted.

- Basic Bash commands, because the remote computer ran Linux.

- The XML and JSON formats, and how our pipeline uses them for its results.

- Bash and regular expressions (via grep) for analyzing pipeline results, such as counting the URLs in each book. To count links, I combined for loops and variables with commands like cat, grep, and wc.

- Reading and understanding a new codebase, since I was improving the existing OBGP Sequencer.

- git for submitting our code changes, and GitHub for managing our tasks.
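The link counting was done in Bash, but the same idea can be sketched in Python: search each result file with a regular expression and count the matches, roughly what piping grep -o into wc -l does. The URL pattern, the *.json file glob, and the function names are hypothetical, and the pattern is deliberately loose rather than a full URL validator.

```python
import re
from pathlib import Path

# Loose, assumed URL pattern: "http://" or "https://" followed by
# characters that are not whitespace, quotes, or angle brackets.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def count_urls(text: str) -> int:
    """Count URL-like matches in a string (like grep -o ... | wc -l)."""
    return len(URL_PATTERN.findall(text))


def count_urls_per_file(directory: str, pattern: str = "*.json") -> dict[str, int]:
    """Map each matching file name in a directory to its URL count."""
    counts = {}
    for path in sorted(Path(directory).glob(pattern)):
        text = path.read_text(encoding="utf-8", errors="replace")
        counts[path.name] = count_urls(text)
    return counts
```

Summing the values of the returned dictionary gives a total link count across all result files.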

Accomplishments

In addition to learning a lot while working on the Open Book Genome Project, I've accomplished a few things. I noticed and solved the issue with the Page Type Detector by letting it use regex patterns in addition to exact text matches. I also improved the ISBN detector to reduce its false positives, which had been fairly common. Lastly, I fixed the bug that kept stop words from being removed from the ngrams, making the ngrams less noisy and more useful, and I added more stop words to further reduce clutter in the ngram results.
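Both detector changes can be illustrated with a short Python sketch. The page type check accepts either an exact phrase or a regex pattern, so a rule can tolerate OCR slips like "Conlents" or a line break inside "table of contents". For ISBNs, the sketch applies the standard ISBN-10 and ISBN-13 check-digit tests, which is one common way to reject look-alike digit strings; whether the OBGP detector uses this exact approach is an assumption, and the rule table and function names are hypothetical.

```python
import re

# Hypothetical rules: a page type matches if the text contains any exact
# phrase OR matches any regex pattern (patterns absorb OCR noise).
PAGE_TYPE_RULES = {
    "table_of_contents": {
        "phrases": ["table of contents"],
        # [tl] accepts the OCR slip "conlents"; \s+ accepts line breaks.
        "patterns": [r"\btable\s+of\s+con[tl]ents\b", r"^\s*con[tl]ents\s*$"],
    },
    "copyright": {
        "phrases": ["all rights reserved"],
        "patterns": [r"\bcopyright\b", r"©\s*\d{4}"],
    },
}


def detect_page_types(page_text: str) -> list[str]:
    """Return every page type whose phrases or patterns match the text."""
    text = page_text.lower()
    detected = []
    for page_type, rule in PAGE_TYPE_RULES.items():
        exact_hit = any(phrase in text for phrase in rule["phrases"])
        regex_hit = any(re.search(p, text, re.MULTILINE) for p in rule["patterns"])
        if exact_hit or regex_hit:
            detected.append(page_type)
    return detected


def is_valid_isbn(candidate: str) -> bool:
    """Check an ISBN-10 or ISBN-13 candidate against its check digit."""
    chars = re.sub(r"[\s-]", "", candidate).upper()
    if re.fullmatch(r"\d{9}[\dX]", chars):
        # ISBN-10: weights 10..1; a final "X" stands for 10; sum % 11 == 0.
        total = sum((10 - i) * (10 if c == "X" else int(c)) for i, c in enumerate(chars))
        return total % 11 == 0
    if re.fullmatch(r"\d{13}", chars):
        # ISBN-13: alternating weights 1 and 3; sum % 10 == 0.
        total = sum((1 if i % 2 == 0 else 3) * int(c) for i, c in enumerate(chars))
        return total % 10 == 0
    return False
```

A checksum test rejects most random 10- or 13-digit strings (phone numbers, catalog numbers) that merely look like ISBNs, while still accepting hyphenated or spaced forms.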

How it Works

Here's an inside look, from a developer's perspective, at what it's like when staff members run the Sequencer on the Internet Archive's books:

- Set up the project using the Docker instructions

- On Archive.org, identify a search query which returns the books we want to sequence

- Create an AdvancedSearch query which returns identifiers for these books in JSON

- Reformat the results from this query and feed them into the Sequencer pipeline
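The query and reformatting steps might look something like the following Python sketch. The advancedsearch.php endpoint and its q, fl[], rows, page, and output parameters belong to Archive.org's AdvancedSearch interface; the example query, the helper names, and the one-identifier-per-line format handed to the Sequencer are assumptions, since the exact input the pipeline expects isn't described here. The sketch only builds the URL and parses a response string, without making a network request.

```python
import json
from urllib.parse import urlencode

ADVANCED_SEARCH_URL = "https://archive.org/advancedsearch.php"


def build_query_url(query: str, rows: int = 100, page: int = 1) -> str:
    """Build an AdvancedSearch URL that returns only identifiers, as JSON."""
    params = [
        ("q", query),
        ("fl[]", "identifier"),  # only ask for the identifier field
        ("rows", rows),
        ("page", page),
        ("output", "json"),
    ]
    return f"{ADVANCED_SEARCH_URL}?{urlencode(params)}"


def extract_identifiers(response_text: str) -> list[str]:
    """Pull identifiers out of the JSON body's response.docs list."""
    data = json.loads(response_text)
    return [doc["identifier"] for doc in data["response"]["docs"]]


def to_sequencer_input(identifiers: list[str]) -> str:
    """Hypothetical reformatting step: one identifier per line."""
    return "\n".join(identifiers) + "\n"
```

To fetch real results, the URL from build_query_url("mediatype:texts") could be opened with urllib.request.urlopen and its decoded body passed to extract_identifiers.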

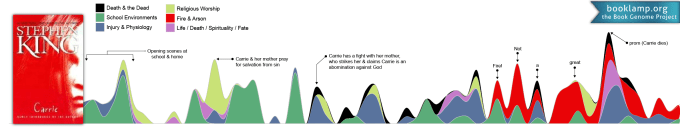

Here’s an example of a completed book_genome.json created by this process.

Want to try it yourself?

If you'd like to try out the Open Book Genome Project Sequencer using just your browser, you can use the OBGP Google Colab. You can add your own processing modules too!

Learn More

Want to learn more about the Open Book Genome Project? Check out the official bookgenomeproject.org website and Open Library's announcement of the project, and learn about the work of Nolan Windham, who previously led development on the project through Google Summer of Code as a high school senior and incoming college freshman.

Want to contribute?

Come volunteer to be an Open Library or Open Book Genome Project fellow!